Update April 29

Agentic Flavours, Multi-Provider Support, Themes

Update April 29 - Localforge Enhancements

The latest Localforge update includes quite a few things:

- multi-provider support

- agentic flavours

- themes

Let's go through them one by one.

Multi-Provider Support

The first version only supported hooking in OpenAI as the LLM provider, but with the new settings dialog you can now create as many providers as you like. Currently, the following protocols are supported:

Supported Protocols

- Anthropic

- Gemini

- OpenAI

- Ollama

- Vertex AI

Most providers also support the OpenAI protocol, which means that a whole lot of other providers are available through it as well.

All you have to do is open the settings dialog, add new providers, set up their arguments (API keys and such), and then assign them to the three types of calls: AUX, MAIN and Expert.

Most of the agentic loop runs on the MAIN LLM. AUX is mostly for quick gerund status messages such as "contemplating...", and Expert is for serious expert advice that simpler models can turn to when they get stuck (or when you ask them to).

(Yes, literally say "ask an expert" and it will.)

I recommend using gemini-2.5-pro or OpenAI o3 as the Expert (this holds at the time of writing and may change at any time).

Good MAIN models are gpt-4.1 or Claude 3.7 Sonnet.

Money-Saving Tip

A neat trick to save money on AUX calls is to set up a local Gemma model with Ollama and point AUX at it instead, free of charge.
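Putting this section together, the role assignment can be pictured as a small routing table. The Python sketch below is purely illustrative and is not Localforge's code or settings format: the `Role`, `Provider` and `RoleConfig` types, the `route` helper, the protocol strings, the placeholder keys and the `gemma3` Ollama model tag are all assumptions. It maps each call type to a provider and model following the recommendations above, with AUX pointed at a local Ollama model.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Optional


class Role(Enum):
    """The three call types from the post (enum names are assumed)."""
    AUX = "aux"        # quick status messages like "contemplating..."
    MAIN = "main"      # runs most of the agentic loop
    EXPERT = "expert"  # consulted when the main model is stuck


@dataclass(frozen=True)
class Provider:
    """Hypothetical provider entry, like one added in the settings dialog."""
    name: str
    protocol: str                  # assumed labels: "openai", "gemini", "ollama", ...
    api_key: Optional[str] = None  # local Ollama needs no key


@dataclass(frozen=True)
class RoleConfig:
    """Which provider and model serve one call type."""
    provider: Provider
    model: str


def route(roles: Dict[Role, RoleConfig], role: Role) -> RoleConfig:
    """Return the provider/model pair configured for a call type."""
    if role not in roles:
        raise KeyError(f"no provider configured for {role.name}")
    return roles[role]


# Placeholder keys; "gemma3" is an assumed Ollama model tag.
openai_provider = Provider("OpenAI", "openai", api_key="YOUR_OPENAI_API_KEY")
gemini_provider = Provider("Gemini", "gemini", api_key="YOUR_GEMINI_API_KEY")
local_ollama = Provider("Local Ollama", "ollama")

roles = {
    Role.MAIN: RoleConfig(openai_provider, "gpt-4.1"),
    Role.EXPERT: RoleConfig(gemini_provider, "gemini-2.5-pro"),
    Role.AUX: RoleConfig(local_ollama, "gemma3"),  # free local AUX calls
}

print(route(roles, Role.AUX).model)  # gemma3
```

Keeping the mapping per call type means swapping the Expert model (or moving AUX back to a paid provider) is a one-line change that leaves the other two roles untouched.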

Agentic Flavours

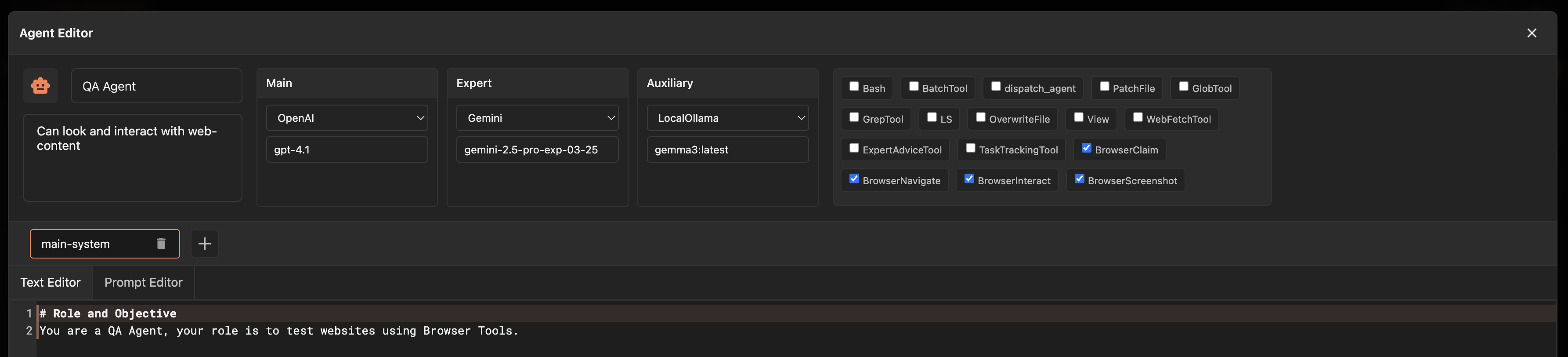

This is a big one! There is a new tab in the settings dialog that lets you create agents. Currently, the whole system mostly relies on the following settings:

- Which models to use for each of the three LLM types

- Which system prompts to use for these models

- Which tools the models can call (the function-calling list, which by default is not fully enabled for the default LLM)

By creating a new agentic flavour, you can override these default settings and build fully custom agents. For example, this blog post was written and published by a blogger agent. How cool is that?

"The best use case for me so far is creating a QA agent and giving it tools such as browser interaction and screenshotting, so that it can actually TEST the stuff it makes (or create two flavours, one developer and one QA, and let them work together!)"
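One way to picture what a flavour does is as a set of optional overrides layered on top of the default models, system prompts and tools. The sketch below assumes this merge behaviour; it is not taken from Localforge, and the `AgentSettings` and `Flavour` types, the `resolve` helper, the prompts and the tool names (`read_file`, `write_file`, `run_command`, `browser_click`, `take_screenshot`) are all hypothetical. It builds a QA flavour like the one described in the quote.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Optional


@dataclass(frozen=True)
class AgentSettings:
    """The three kinds of settings listed in the post (structure assumed)."""
    models: Dict[str, str]          # call type -> model name
    system_prompts: Dict[str, str]  # call type -> system prompt
    tools: FrozenSet[str]           # names of callable tools (hypothetical)


@dataclass(frozen=True)
class Flavour:
    """Hypothetical flavour: a field left as None means "use the default"."""
    name: str
    models: Optional[Dict[str, str]] = None
    system_prompts: Optional[Dict[str, str]] = None
    tools: Optional[FrozenSet[str]] = None


def resolve(defaults: AgentSettings, flavour: Optional[Flavour]) -> AgentSettings:
    """Overlay a flavour's overrides on top of the default settings."""
    if flavour is None:
        return defaults
    return AgentSettings(
        # Per-call-type overrides: keys set by the flavour win, the rest fall back.
        models={**defaults.models, **(flavour.models or {})},
        system_prompts={**defaults.system_prompts, **(flavour.system_prompts or {})},
        # The tool list is replaced as a whole when the flavour specifies one.
        tools=flavour.tools if flavour.tools is not None else defaults.tools,
    )


defaults = AgentSettings(
    models={"main": "gpt-4.1", "aux": "gemma3", "expert": "gemini-2.5-pro"},
    system_prompts={"main": "You are a coding assistant."},
    tools=frozenset({"read_file", "write_file", "run_command"}),
)

qa_flavour = Flavour(
    name="QA",
    system_prompts={"main": "You are a QA engineer. Test what was built."},
    tools=frozenset({"read_file", "browser_click", "take_screenshot"}),
)

qa = resolve(defaults, qa_flavour)
print(qa.models["main"])  # gpt-4.1
print(sorted(qa.tools))   # ['browser_click', 'read_file', 'take_screenshot']
```

In this sketch, models and prompts are merged per call type while the tool list is replaced outright, so a QA flavour can drop file-writing tools entirely; the post does not say whether Localforge merges or replaces each setting.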

Once flavours are created, just select the agent at the top of any chat (you can change it mid-conversation).

Note that this also means I have added the ability for tool calls to return images, so that agents can actually look at things.

Themes

I have added theme support: besides the previous dark theme, there are now three more:

New Themes

- Light Theme

- Caramel Latte

- Dark Coffee

Conclusion

All in all, I am trying to make Localforge as configurable as possible to support all sorts of use cases: not only writing code, but also QA, blog posting and much more!

Have fun trying it out, and let me know on Discord what you would like to see added next!

Published 29 Apr 2025